- Get link

- X

- Other Apps

This is a quick-and-dirty post to explain in more detail (and hopefully in a more intuitive way) the recent findings published in the paper "What do Deep Networks Like to See?". TLDR: some state-of-the-art models use way less information from the input than initially thought. By measuring how much info they process, we can explain findings in the literature that were previously unaccounted for. We can also establish a hierarchy of models that use more or less information wrt. other models.

After publication a nice discussion in this Fast.AI forum dug into the origins of the checkerboard artifacts and I added some points in that regard at the end of this post.

The quick rundown

Let's first have a look at the main findings of the paper:

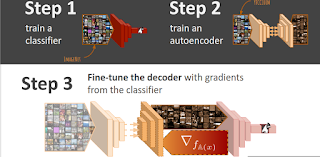

- We start by training an autoencoder (AE) that reconstructs images almost perfectly (it models the identity \(\mathbb{A}(x) \approx x\) with an unsupervised MSE loss).

- If reconstructions are good, evaluating classification accuracy for Imagenet (using a pre-trained classifier) should yield similar results when using original images or the corresponding AE reconstructions.

- We found that accuracy drops quite a bit (2.83 percentage points for ResNet-50) when using reconstructions. This lower accuracy indicates that the original information contained in the image that was lost/perturbed by the AE reconstruction, was actually useful for the pre-trained classifier.

- We let the decoder of our AE update its parameters by passing the image through the AE and into the classifier; computing the classification loss (supervised) and backpropagating all the way to the decoder. We only update the decoder's parameters though.

- Measuring accuracy again with the new fine-tuned AE shows values that are comparable to the ones using only the classifier with original images (accuracy is sometimes a bit higher but most importantly, not lower than when original inputs are used).

- Looking at the reconstructed images of the fine-tuned AE, we see that constant "checkerboard-like" artifacts appear in the reconstructions. "Constant" here means that values at certain pixel positions seem to be the same, regardless of the image being reconstructed (which in turn means that the pixel value is constant regardless of the class that it is associated with).

- We measure how "constant" pixel positions end up being by measuring the mutual information between two random images (intra-class nMI). Intuitively, we should not be able to predict the value of a pixel position in IMAGE_1 based on the pixel at the same position of a second randomly selected IMAGE_2 (except for random chance which is very close to 0). As soon as the intra-class nMI goes up in this case, we can conclude that there are more values that can be predicted between two random images. Hence, exposing evidence of a constant pattern in the reconstructions.

- We found that the intra-class nMI does indeed go up in most cases; most prominently for AlexNet and ResNet50. These results led us to the conclusion that said networks are discarding quite a bit of information in the prediction step (given that their accuracy is as high as with the original images). Again, we call this phenomenon a "drop in information" because of how constant the patterns are i.e., if certain pixel positions have the same value regardless of the image, those pixel positions add no information regarding the class associated with the image.

Discussion:

"Artifacts are caused by deconvolutions":

"Artifacts are caused by deconvolutions":

- There is a well known issue with deconvolutions when striding has not been chosen carefully, causing artifacts that look quite similar to the ones found in our work. However, it is important to note that SegNet is not using deconvolutions (also known as transposed convolutions) but unpooling. This way of doing upsampling is less prone to said artifacts. Furthermore, we see how after the initial (unsupervised) training of the AE, said checkerboard patterns are not present. Moreover, those checkerboard patterns are not really present even after running the fine-tuning step to VGG16 or Inception v3 suggesting that the appearance of said checkerboard artifacts are not a direct byproduct of the decoder's architectural design.

- Most importantly, it is worth noting that the size, shape or color of the constant artifact does not have a direct impact on the paper's main finding, which focuses on just the existence of a pattern that is constant throughout the entire dataset, and that it does not negatively affect the classification performance.

The improved performance is due to the decoder:

- That's indeed true :) The only component that changes during the fine-tuning step while the classification loss is being optimized is the decoder so, in a way, the decoder is the responsible for improving the performance.

- Again, this does not contradict any claims in the paper nor it undermines the conclusions: the paper doesn't portrait the proposed architecture as a mean to improve performance (we were certainly seduced by the idea) but we would need to run more and different kind of experiments before claiming that. Instead, we highlight the restored levels of accuracy as a guarantee that whatever signal is left in the reconstructed images is as useful as the original image (because the classification accuracy is at least as good).

- An interesting byproduct of this argument is that pre-pending the finetuned AE seems to be a beneficial addition to the classification pipeline in general (computationally expensive for sure, but beneficial nonetheless). Networks do not trivially get better by naively adding more filters or more layers, nor do they seem to improve by prepending a good AE; finetuning the AE as we proposed does seem to improve accuracy most of the times though. Again, this is just a byproduct of the argument that the AE does improve classification, the paper does not claim it.

If you have any more comments, suggestions, thoughts, questions, ideas, etc, please write down in the comments below or get in touch. I'll try to address most burning/critical/recurrent questions as soon as possible.

- Get link

- X

- Other Apps

Comments